Nikhil Prasad Fact checked by: Thailand Medical News Team Jan 12, 2026

Medical News: Google is facing fierce scrutiny after a Guardian investigation revealed that its newly deployed AI Overviews tool had been feeding incorrect and dangerously misleading health information to millions of users. The AI feature sits at the top of Google search pages and is marketed as a way to instantly summarize essential answers without clicking links. Yet when it comes to health data, the stakes are life and death, and experts warn the rollout has veered into alarming territory.

A Guardian probe forces Google to yank flawed AI health summaries over safety fears.

Image Credit: StockShots

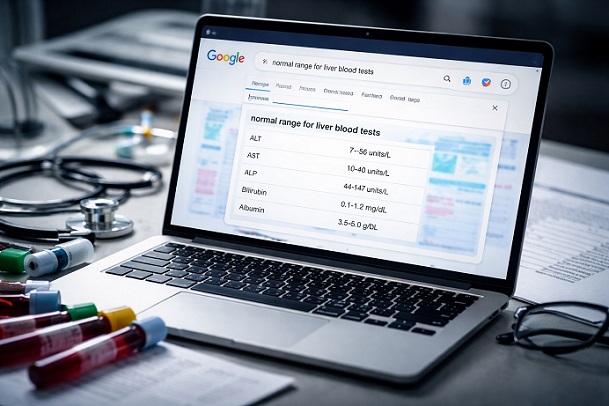

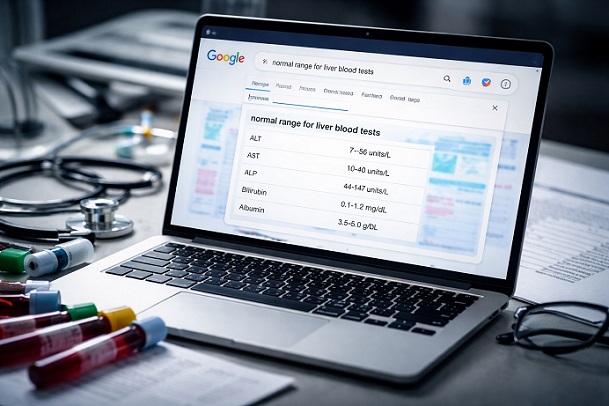

The investigation found that the tool provided fabricated reference values for critical blood examinations linked to liver health. These figures were neither medically validated nor accompanied by safety notes doctors say are required to interpret such tests properly. In multiple cases, the automated summary listed ranges that might lead sick individuals to falsely believe they were healthy and avoid follow-up care. Specialists described the errors as “dangerous” and “alarming” because they risk masking conditions such as cirrhosis or autoimmune hepatitis.

Liver Test Numbers Found to Be False and Misleading

Typing straightforward search queries including “what is the normal range for liver blood tests” yielded sprawling lists of numerical values. These were presented without caveats on patient sex, age, ethnicity, or even the fact that many countries use differing standard ranges. Physicians stress that interpreting liver function tests, known as LFTs, is inherently complex. They comprise multiple biomarkers such as ALT, AST, ALP, bilirubin, and albumin. Doctors often rely on trend patterns and combinations rather than single datapoints.

Experts warned the Google outputs could be not only medically wrong but dangerously reassuring. The British Liver Trust highlighted that people with advanced liver disease frequently register values within normal thresholds. Without proper clinical follow-up, early symptoms can be overlooked until damage becomes irreversible. The absence of qualified warnings or contextual language therefore created a situation where vulnerable users could wrongly conclude they were in the clear.

Google Removes Specific Queries but Critics Say Too Little Too Late

Following publication of the findings, Google quietly removed AI Overviews for search terms referencing liver function blood ranges. The company stated it does not comment on individual takedowns but confirmed action is taken when content violates policies or lacks required context. A spokesperson stressed that AI Overviews are designed to be helpful and reliable and that broad improvements are underway.

Yet campaigners argue the fix barely scratches the surface. They tested slight variations of the original questions, such as “LFT reference range” or “LFT test reference range,” and still received the AI summaries. The numerical values again appeared in bold with no cautionary explanation that they may not apply to that user’s clinical situation. Critics say this proves the tool cannot be trusted for health advice and that Google is focusing on whack-a-mole suppression rather than systemic safety.

Patient Groups Warn Trust in Information Is Eroding

Patient groups emphasize that millions of people already struggle to locate verified healthcare sources online. Sue Farrington, chair of the Patient Information Forum, said that Google’s dominant position means its responsibilities are greater than ever. With more than nine in ten global online searches flowing through the platform, small errors can scale into worldwide harm.

Farrington called the removal a positive first move but warned that widespread inaccuracies persist in cancer and mental health searches. The Guardian had highlighted multiple dangerous errors in these categories, yet Google declined to disable them, arguing that the summaries linked to reputable websites and advised when professional care was needed. Internal clinical reviewers reportedly backed that assessment, though external experts strongly disagreed.

A Growing Debate Over AI Reliability in Search

Technology analysts note that AI Overviews appear above traditional ranked results, which reshapes the nature of search itself. Users conditioned to trust Google tend to treat the top box as authoritative. As a result, misleading figures are granted implicit legitimacy. Specialists say this Medical News report underscores broader concerns about AI hallucinations, fabrication of facts, and opaque sourcing.

Google counters that AI Overviews only surface when the system has high confidence in response quality and says it continuously evaluates summarization performance. Critics respond that a confidence score is meaningless if the model's confidence is itself misjudged. Unlike conventional search results, AI outputs may combine information from several sources or interpolate missing data, meaning an incorrect number can emerge that exists nowhere in medical literature.

Public Health Stakes Are Rising as AI Expands

Healthcare charities stress that liver disease cases are rising and many sufferers are unaware until late stages. If even a small fraction of patients postpone treatment because a search suggests false reassurance, the cumulative harm could be profound. Experts say online guidance must always emphasize that blood tests require medical interpretation and that personal symptoms, history, and imaging results often outrank simple numerical values.

AI researchers suggest a more transparent architecture including explicit disclaimers, source lists, and stronger safety filters for clinical terms. Others call for formal regulatory oversight similar to medical device approval. Google, however, has not disclosed whether it intends to apply different risk standards to health topics than to entertainment or cooking queries.

What Happens Next

The episode illustrates the collision between fast-moving generative models and slow-moving scientific certainty. While AI search summaries may prove helpful for restaurant recommendations or trivia, they are currently unsuited to serve as a substitute for clinical support. Failure to recognize that dividing line could imperil patient lives and corrode trust in platforms relied upon by billions.

Experts argue the burden now falls squarely on Google to institute guardrails that prevent automated misinformation from slipping into critical health categories. Without clear safeguards and specialist review, users may continue to receive answers that appear authoritative yet are wrong in ways that harm. As AI capabilities expand, so too must accountability, monitoring, and transparency across tech companies that distribute information at global scale. For genuinely safe digital healthcare ecosystems, platforms must respect the complexity, uncertainties, and individual variation inherent in medicine. Health professionals warn that until that happens, users should treat AI health tidbits with extreme caution and always consult trained clinicians before making decisions that could affect their wellbeing.

Media References:

https://www.theguardian.com/technology/2026/jan/02/google-ai-overviews-risk-harm-misleading-health-information

https://futurism.com/artificial-intelligence/google-ai-overviews-dangerous-health-advice

For the latest on health misinformation from AI platforms and chatbots, keep logging on to Thailand Medical News.